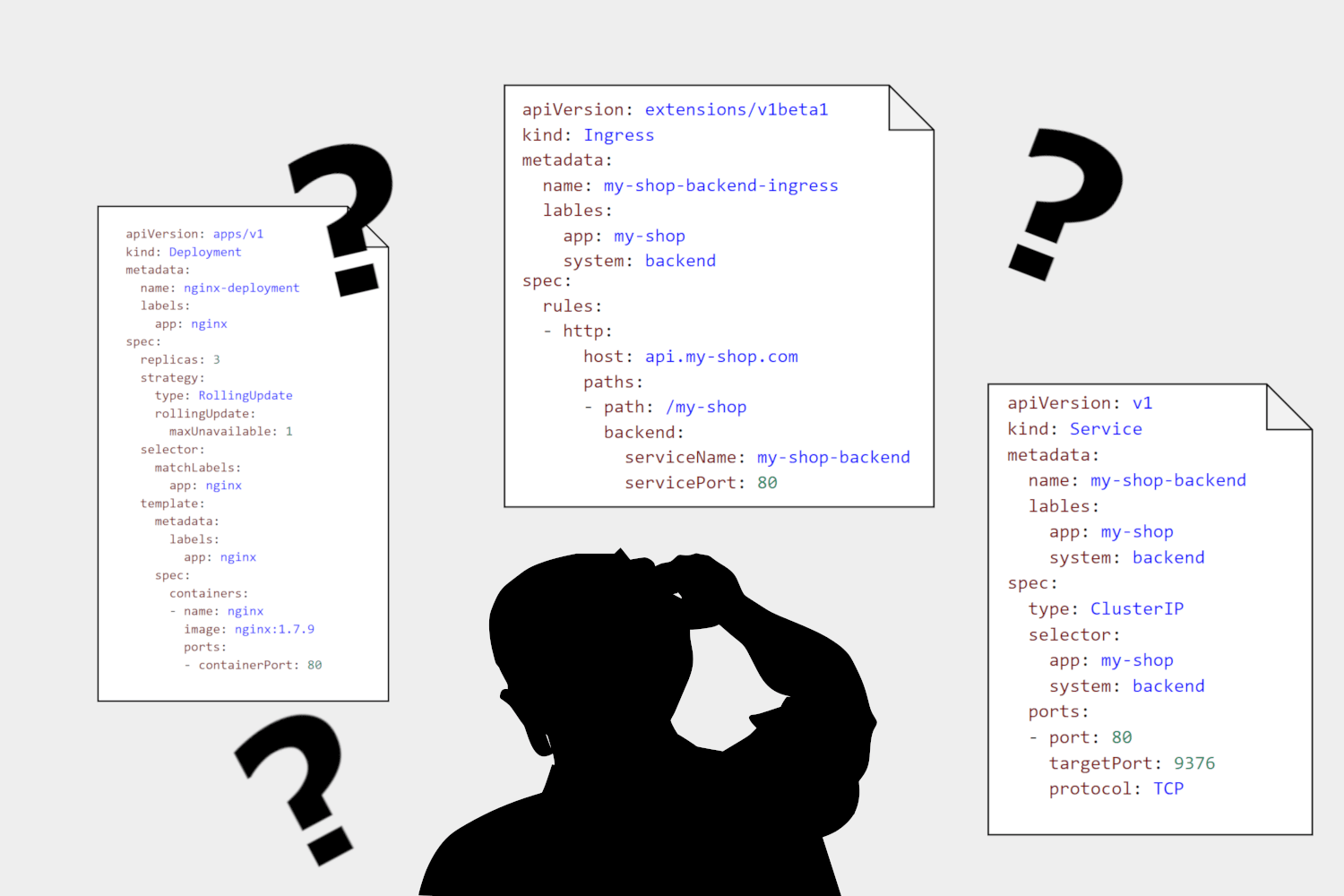

In my previous post I talked about some of the fundamental components of Kubernetes like pods, deployments, and services. In this post I'll show how you can define and configure these resources using YAML manifests. I'm not going to go into how to deploy these resources until the next post, where I'll introduce the tool Helm.

In this post I'll describe the manifests for the resources I described in the previous post: pods, deployments, services, and ingresses. I'm not going to go through all the different configuration options and permutations that you could use. Instead, I'm going to focus on the most common sections and the general format. In later posts in this series we'll tweak the manifests to add extra features that help when deploying your ASP.NET Core applications to Kubernetes.

Defining Kubernetes objects with YAML manifests

There are several different ways to create objects in a Kubernetes cluster - some involve imperative commands, while others are declarative, and describe the desired state of your cluster.

Either way, once you come to deploying a real app, you'll likely end up working with YAML configuration files (also called manifests). Each resource type manifest (e.g. deployment, service, ingress) has a slightly different format, though there are commonalities between all of them. We'll look at the deployment manifest first, and then take a detour to discuss some of the features common to most manifests. We'll follow up by looking at a service manifest, and finally an ingress manifest.

The deployment and pod manifest

As discussed in the previous post, pods are the "smallest deployable unit" in Kubernetes. Rather than deploying a single container, you deploy a pod which may contain one or more containers. It's totally possible to deploy a standalone pod in Kubernetes, but when deploying applications, it's far more common to deploy pods as part of a "deployment" resource.

See my previous post for a description of the Kubernetes deployment resource.

For that reason, you typically define your pod resources inside a deployment manifest. That is, you don't create your pods and then create a deployment to manage them; you create a deployment which knows how to create your pods for you.

With that in mind, let's take a look at a relatively basic deployment manifest.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

I'll walk through each section of the YAML below.

Remember, YAML is whitespace-sensitive, so take care with indenting when writing your manifests!

The deployment apiVersion, kind, and metadata

The first three keys in the manifest, apiVersion, kind, and metadata, appear at the start of every Kubernetes manifest.

apiVersion: apps/v1

Each manifest defines an apiVersion to indicate the version of the Kubernetes API it is defined for. Each version of Kubernetes supports a number of different API versions for both the core resource APIs and extension APIs. Most of the time you won't need to worry about this too much, but it's worth being aware of.

kind: Deployment

The kind defines the type of resource that the manifest is for, in this case it's for a deployment. Each resource will use a slightly different format in the body of the manifest.

metadata:
  name: nginx-deployment
  labels:
    app: nginx

The metadata provides details such as the name of the resource, as well as any labels attached to it. Labels are key-value pairs associated with the resource - I'll discuss them further in a later section. In this case, the deployment has a single label, app, with the value nginx.

The spec section

For all but the simplest resources, Kubernetes manifests will include a spec section that defines the configuration for the specified kind of resource. For the deployment manifest, the spec section defines the containers that make up a single pod, and also how those pods should be scaled and deployed.

replicas: 3
strategy:
  type: RollingUpdate
  rollingUpdate:
    maxUnavailable: 1

The replicas and strategy keys define how many instances of the pod the deployment should aim to create, and the approach it should use to create them. In this case, we've specified that we want Kubernetes to keep three pods running at any one time, and that Kubernetes should perform a rolling update when new versions are deployed.

We've also defined some configuration for the rolling update strategy, by setting maxUnavailable to 1. That means that when a new version of a deployment is released, and old pods need to be replaced with new ones, the deployment will only remove a single "old" pod at a time before starting a "new" one.

As with much of Kubernetes, there's a plethora of configuration for each resource. For example, for deployments you can control how many additional pods a rolling update may add at a time (maxSurge), or how many seconds a new pod needs to be ready for it to be considered available (minReadySeconds). It's worth checking the documentation for all the options.
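To make those options concrete, here is a minimal sketch of how they might sit alongside the settings shown above. The specific values (a surge of one pod, ten seconds of readiness) are illustrative assumptions rather than recommendations:

```yaml
# Fragment of a deployment manifest; only the keys discussed above are shown
spec:
  replicas: 3
  minReadySeconds: 10      # a new pod must be ready for 10s before it counts as available
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1    # at most one pod below the desired count during an update
      maxSurge: 1          # at most one pod above the desired count during an update
```

With three replicas, these values would let the pod count vary between two and four while an update rolls out.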

selector:
  matchLabels:
    app: nginx

The selector section of the deployment manifest is used by a deployment to know which pods it is managing. I'll discuss the interplay of metadata and selectors in the next section, so we'll move on for now.

template:
  metadata:
    labels:
      app: nginx
  spec:
    containers:
    - name: nginx
      image: nginx:1.7.9
      ports:
      - containerPort: 80

The final element in the spec section of a deployment is the template. This is a "manifest-inside-a-manifest", in that its contents are effectively the manifest for a pod. So with this one deployment manifest you're defining two types of resources: a pod (which can optionally contain multiple containers, as I described previously), and a deployment to manage the scaling and lifecycle of that pod.

Technically you're also managing a ReplicaSet resource, but I consider that to mostly be an implementation detail you don't need to worry about.

The pod template in the above example defines a single container, named nginx, built from the nginx:1.7.9 Docker image. The containerPort defined here describes the port exposed inside the container: this is primarily informational, so you don't have to provide it, but it's good practice to do so.
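Because the template is effectively a pod manifest, you can picture it as the following standalone manifest. This is only a sketch to show the relationship; the name nginx-pod is a hypothetical one I've picked for illustration, and you wouldn't normally write this manifest yourself when using a deployment:

```yaml
# A standalone pod equivalent to the deployment's template (illustrative only)
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod   # hypothetical name; pods created from a deployment get generated names
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80
```

The only additions compared to the template are the apiVersion, kind, and name, which are filled in for you when the pod is created from a deployment.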

If you wanted to deploy two containers in a pod, you would add another element to the containers list, something like the following:

template:
  metadata:
    labels:
      app: nginx
  spec:
    containers:
    - name: nginx
      image: nginx:1.7.9
      ports:
      - containerPort: 80
    - name: hello-world
      image: debian
      command: ["/bin/sh"]
      args: ["-c", "echo Hello world"]

In this example our pod contains two containers: the nginx:1.7.9 image, and a (useless!) debian image configured to just echo "Hello world" to the console.

We've traversed a whole deployment manifest now, but there are many more things you could configure if you wish. We'll look at some of those in later posts, but we've covered the basics.

Although each manifest kind has a slightly different spec definition, they all have certain things in common, such as the role of metadata and selectors. In the next section I'll cover how and why those are used, as it's an important concept.

The role of labels and selectors in Kubernetes

Labels are a simple concept that is used extensively throughout Kubernetes. They're key-value pairs associated with an object. Each object can have many different labels, but each key can only be specified once.

Taking the previous example manifest, the section:

metadata:
  name: nginx-deployment
  labels:
    app: nginx

adds the key app to the deployment object, with a value of nginx. Similarly, in the spec.template section of the deployment (where you're defining the pod manifest):

spec:
  template:
    metadata:
      labels:
        app: nginx

This adds the same key-value pair to the pod. Both of these examples only added a single label, but you can attach any number. For example, you could tag objects with an environment label to signify dev/staging/production, and a system label to indicate frontend/backend:

metadata:
  labels:
    app: nginx
    environment: staging
    system: frontend

Labels can be useful when you want to query your cluster using the command line program kubectl. For example, you could use the following to list all the pods that have both the system=frontend and environment=staging labels:

kubectl get pods -l environment=staging,system=frontend

Labels are mostly informational so you can sprinkle them liberally as you see fit. But they are also used integrally by Kubernetes wherever a resource needs to reference other resources. For example, the deployment resource described earlier needs to know how to recognise the pods it is managing. It does so by defining a selector.

selector:
  matchLabels:
    app: nginx

A selector defines a number of conditions that must be satisfied for an object to be considered a match. For example, the selector section above (taken from the deployment resource) will match all pods that have an app label with the value nginx.

The selector version of the kubectl query above would look like the following:

selector:
  matchLabels:
    environment: staging
    system: frontend

Note that for a pod to be a match, it would have to have all of the listed labels (i.e. the labels are combined using AND operators). Therefore, your selectors may well specify fewer labels than are added to your pods - you only need to include enough matchLabels such that your pods can be matched unambiguously.
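As a sketch of that last point, the deployment fragment below attaches three labels to its pods but selects on only one of them. The environment and version labels (and their values) are assumptions for illustration:

```yaml
spec:
  selector:
    matchLabels:
      app: nginx            # the only label the deployment uses to find its pods
  template:
    metadata:
      labels:
        app: nginx          # must satisfy the selector above
        environment: staging
        version: "1.7.9"
```

Because the selector ignores environment and version, those labels play no part in how the deployment identifies its pods.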

Some resources also support matchExpressions that let you use set-based operators such as In, NotIn, Exists, and DoesNotExist.
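A minimal sketch of what that might look like in a deployment's selector is shown below. The label keys and values here are hypothetical, chosen only to demonstrate two of the operators:

```yaml
selector:
  matchExpressions:
  - key: environment
    operator: In            # label value must be one of the listed values
    values:
    - staging
    - production
  - key: deprecated
    operator: DoesNotExist  # pod must not carry this label at all
```

As with matchLabels, all of the expressions must be satisfied for a pod to match.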

This section seems excessively long for a relatively simple concept, but selectors are one of the places where I've messed up our manifests. By using an overly-specific selector, I found I couldn't upgrade our apps as the new version of a deployment would no longer match the pods. I realise that's a bit vague, but just think carefully about what you're selecting! 🙂

Deployments aren't the only resources that use selectors. Services use the same mechanism to define which pods they pass traffic to.

The service manifest

In my previous post I described services as "in-cluster load balancers". Each pod is assigned its own IP address, but pods can be started, stopped, or could crash at any point. Instead of making requests to pods directly, you can make requests to a service instead, which forwards the request to one of its associated pods.

Because services deal with networking, I find them one of the trickiest resources to work with in Kubernetes, as configuring anything beyond the basics seems to require a thorough knowledge of networking in general. Luckily, in most cases the basics get you a long way!

The following manifest is for a service that acts as a load balancer for the pods that make up the back-end of an application called "my-shop". As before, I'll walk through each section of the manifest below:

apiVersion: v1
kind: Service
metadata:
  name: my-shop-backend
  labels:
    app: my-shop
    system: backend
spec:
  type: ClusterIP
  selector:
    app: my-shop
    system: backend
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP

The service apiVersion, kind, and metadata

As always, the manifest starts by defining the kind of manifest (Service) and the version of the Kubernetes API required. The metadata assigns a name to the service, and adds two labels: app:my-shop and system:backend. As before, these labels are primarily informational.

The service spec

The spec section of the manifest starts by defining the type of service we need. Depending on the version of Kubernetes you're using, you'll have up to four options:

- ClusterIP. The default value, this makes the service only reachable from inside the cluster.

- NodePort. Exposes the service publicly on each node at an auto-generated port.

- LoadBalancer. Hooks into cloud providers like AWS, GCP, and Azure to create a load balancer which is used to handle the load balancing.

- ExternalName. Works differently to other services, in that it does not proxy or forward directly to any pods. Instead it is used to map an internal DNS service name to another DNS CNAME.

If you're confused, don't worry. For ASP.NET Core applications, you can commonly use the default ClusterIP to act as an "internal" load-balancer, and use an ingress to route external HTTP traffic to the service.
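For comparison, here is a hedged sketch of the my-shop-backend service using the NodePort type instead. The service name and the nodePort value of 30080 are assumptions for illustration; if you omit nodePort, Kubernetes picks a port from its configured range (30000-32767 by default):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-shop-backend-nodeport   # hypothetical name
spec:
  type: NodePort
  selector:
    app: my-shop
    system: backend
  ports:
  - port: 80          # port exposed inside the cluster
    targetPort: 8080  # port on the pods
    nodePort: 30080   # port opened on every node (assumed value)
    protocol: TCP
```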

spec:
  selector:
    app: my-shop
    system: backend

Next in the spec section is a selector. This is how you control which pods a service is load balancing. As described in the previous section, the selector will select all pods that have all of the required labels, so in this case app:my-shop and system:backend. The service will route any incoming requests to those pods.

spec:
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP

Finally, we come to the ports section of the spec. This defines the port that the service will be exposed on (port 80) and the IP protocol to use (TCP in this case, but could be UDP for example). The targetPort is the port on the pods that traffic should be routed to (8080). For details on other options available here, see the documentation or API reference.
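One option worth sketching is referring to a container port by name rather than by number. This assumes the pod template gives its port a name (http here is my own choice, and the container name and image are hypothetical too):

```yaml
# In the deployment's pod template (fragment)
containers:
- name: my-shop-backend        # hypothetical container name
  image: my-shop-backend:1.0   # hypothetical image
  ports:
  - name: http
    containerPort: 8080
---
# In the service spec (fragment)
ports:
- port: 80
  targetPort: http   # resolved to the container port named "http"
  protocol: TCP
```

The two fragments belong in separate manifests; they're shown together here only to make the link between the names visible.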

For ASP.NET Core applications, you typically want to expose some sort of HTTP service to the wider internet. Deployments handle replication and scaling of your app's pods, and services provide internal load-balancing between them, but you need an ingress to expose your application to traffic outside your Kubernetes cluster.

The ingress manifest

As described in my last post, an ingress acts as an external load-balancer or reverse-proxy for your internal Kubernetes service. The following manifest shows how you could expose the my-shop-backend service publicly:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: my-shop-backend-ingress
  labels:
    app: my-shop
    system: backend
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: api.my-shop.com
    http:
      paths:
      - path: /my-shop
        backend:
          serviceName: my-shop-backend
          servicePort: 80

The ingress apiVersion, kind, and metadata

As always, the manifest contains an API version and a kind for the manifest. The metadata also includes a name for the ingress, and labels associated with the object. However we also have a new element, annotations. Annotations work very similarly to labels, in that they are key-value pairs of data. The main difference is that annotations can't be used for selecting objects.

In this case, we've added the annotation nginx.ingress.kubernetes.io/rewrite-target with a value of /. This annotation uses a special key that is understood by the NGINX ingress controller which tells the controller it should rewrite the "matched-path" of incoming requests to be /.

For example, if a request is received at the following path:

/my-shop/orders/123

the ingress controller will rewrite it to

/orders/123

by stripping the /my-shop segment defined in the spec below.

In order to use an ingress, your cluster needs to have an ingress controller deployed. Each ingress controller has slightly different features, and will require different annotations to configure it. Your ingress manifests will therefore likely differ slightly depending on the specific ingress controller you're using.

The ingress spec

The spec section of an ingress manifest contains all the rules required to configure the reverse-proxy managed by the cluster's ingress controller. If you've ever used NGINX, HAProxy, or even IIS, an ingress is fundamentally quite similar. It defines a set of rules that should be applied to incoming requests to decide where to route them.

Each ingress manifest can define multiple rules, but bear in mind the "deployable units" of your application. You will probably include a single ingress for each application you deploy to Kubernetes, even though you could technically use a single ingress across all of your applications. The ingress controller will handle merging your multiple ingresses into a single NGINX (for example) config.

spec:
  rules:
  - host: api.my-shop.com
    http:
      paths:
      - path: /my-shop
        backend:
          serviceName: my-shop-backend
          servicePort: 80

A rule can optionally define a host (api.my-shop.com in this case) if you need to run different applications in a cluster at the same path, and differentiate based on hostname. Within the rule's http section, you define the match-path to use (/my-shop). It is this match-path which is re-written to / based on the annotation in the metadata section.

As well as the path and host to match incoming requests, you define the internal service that requests should be forwarded to, and the port to use. In this case we're routing to the my-shop-backend service defined in the previous section, using the same port (80).

This simple example matches incoming requests to a single service, and is probably the most common ingress I've used. However in some cases you might have two different types of pod that make up one logical "micro-service". In that case, you would also have two Kubernetes services, and could use path based routing to both services inside a single ingress, e.g.:

spec:
  rules:
  - http:
      paths:
      - path: /orders
        backend:
          serviceName: my-shop-orders-service
          servicePort: 80
  - http:
      paths:
      - path: /history
        backend:
          serviceName: my-shop-order-history-backend
          servicePort: 80
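Path-based routing can also be combined with the optional host described earlier. As a sketch, the following routes the same path to two different services depending on the hostname. The second host and its service name are hypothetical, as is the /api path, and the format assumes the same extensions/v1beta1 API version used in this post:

```yaml
spec:
  rules:
  - host: api.my-shop.com
    http:
      paths:
      - path: /api
        backend:
          serviceName: my-shop-backend
          servicePort: 80
  - host: api.other-shop.com              # hypothetical second application
    http:
      paths:
      - path: /api
        backend:
          serviceName: other-shop-backend # hypothetical service
          servicePort: 80
```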

As always in Kubernetes, there's a multitude of other configuration you can add to your ingresses, and if you're having issues it's worth consulting the documentation. However if you're deploying apps to an existing Kubernetes cluster, this is probably most of what you need to know. The main thing to be aware of is which ingress controller you're using in your cluster.

Summary

In this post I walked through each of the main Kubernetes resources that app-developers should know about: pods, deployments, services, and ingresses. For each resource I showed an example YAML configuration file that can be used to deploy the resource, and described some of the most important configuration values. I also discussed the importance of labels and selectors for how Kubernetes links resources together.

In the next post, I'll show how to manage the complexity of all these manifests by using Helm charts, and how that can simplify deploying applications to a Kubernetes cluster.